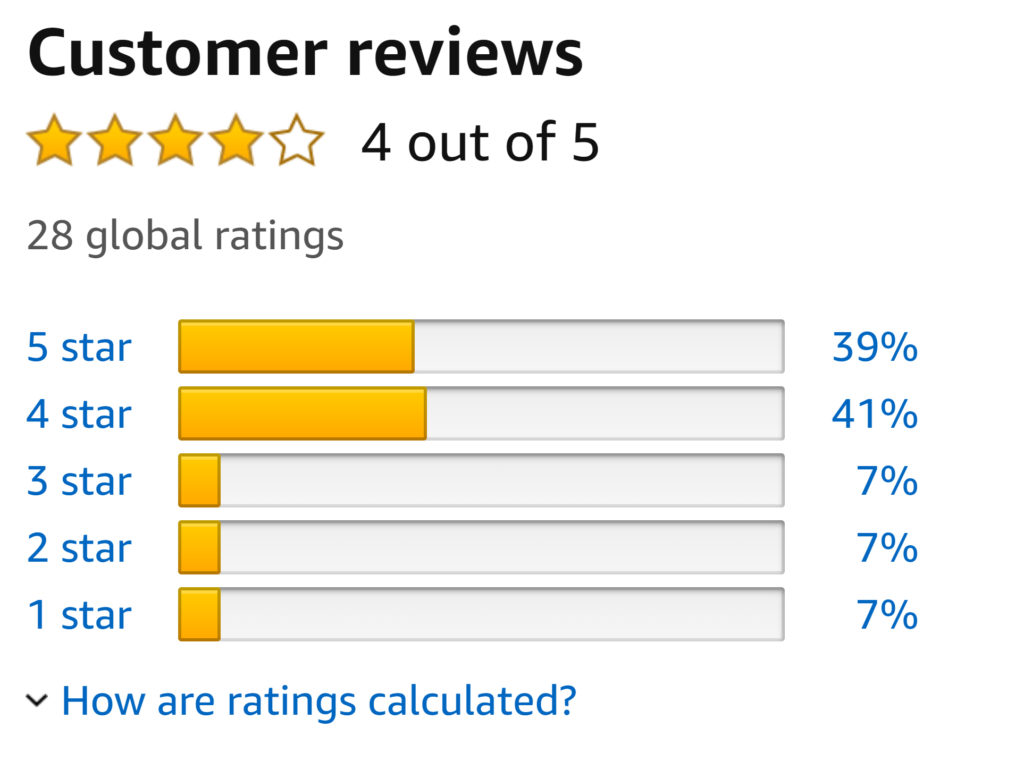

I recently paid for advertising to promote an Amazon free giveaway for The Demon of Histlewick Downs in anticipation of the upcoming release of Hanged Man’s Gambit. I’d hoped the promotion would not only raise awareness of the book, but also increase its visibility on Amazon, perhaps even improving the book’s rank there. So far the book has scored another 5-star review and 2 more 4-star reviews, and its Amazon rating went from 3.9 to 4.0. All good, right? Well, not exactly. Though the book has 14 five-star reviews and only 10 four-star reviews, the new ratings reversed the lengths of those two bars on Amazon’s Customer Reviews chart. It now appears as though Demon has received more four-star ratings than five-star ratings, as shown below:

Amazon, you see, doesn’t rate books according to the actual data it receives. Instead, it filters that data through its “algorithm.” Amazon does disclose that it uses the algorithm, but the disclosure is buried on the site: it only shows up if you click the link that offers to tell you how ratings are calculated (see image above), and even then you have to scroll down several pages’ worth of material. Of course, most readers will assume, based on the accompanying chart, that they already know how ratings are calculated, so they have very little incentive to click that link. If they did, this is what they’d see:

> To calculate the overall star rating and percentage breakdown by star, we don’t use a simple average. Instead, our system considers things like how recent a review is and if the reviewer bought the item on Amazon. It also analyzes reviews to verify trustworthiness.

Most people would reasonably assume the cumulative rating is a simple average: the total number of stars customers gave, divided by the total possible number of stars, multiplied by 5. They would also assume that if a book gets more five-star ratings than four-star ratings, the bar for the five-star rating would be longer. Turns out, that’s not always the case.
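For readers who want to check that straightforward calculation themselves, here is a minimal Python sketch of the simple average and of the per-star percentage breakdown that would set the bar lengths, applied to the raw counts for The Demon of Histlewick Downs. The 14 five-star, 10 four-star, one two-star, and one one-star counts come from this post; the two three-star ratings are my inference from the 28-rating total, and the function names are just illustrative. This is the plain arithmetic, not Amazon’s undisclosed weighting.

```python
# Raw star counts for The Demon of Histlewick Downs, as stated in the post.
# ASSUMPTION: the three-star count (2) is inferred: 28 total - 14 - 10 - 1 - 1 = 2.
DEMON_COUNTS: dict[int, int] = {5: 14, 4: 10, 3: 2, 2: 1, 1: 1}


def simple_average(counts: dict[int, int]) -> float:
    """Unweighted mean: total stars given divided by the number of ratings."""
    total_ratings = sum(counts.values())
    total_stars = sum(stars * n for stars, n in counts.items())
    return total_stars / total_ratings


def percentage_breakdown(counts: dict[int, int]) -> dict[int, float]:
    """Share of all ratings at each star level, in percent (the bar lengths)."""
    total = sum(counts.values())
    return {stars: 100 * n / total for stars, n in counts.items()}


if __name__ == "__main__":
    print(f"Average: {simple_average(DEMON_COUNTS):.2f}")
    for stars, pct in sorted(percentage_breakdown(DEMON_COUNTS).items(), reverse=True):
        print(f"{stars} star: {pct:.1f}%")
```

It prints an average of 4.25 (119 stars over 28 ratings) and a five-star share of 50.0% against 35.7% for four stars, so on the raw data the five-star bar is clearly the longer one.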

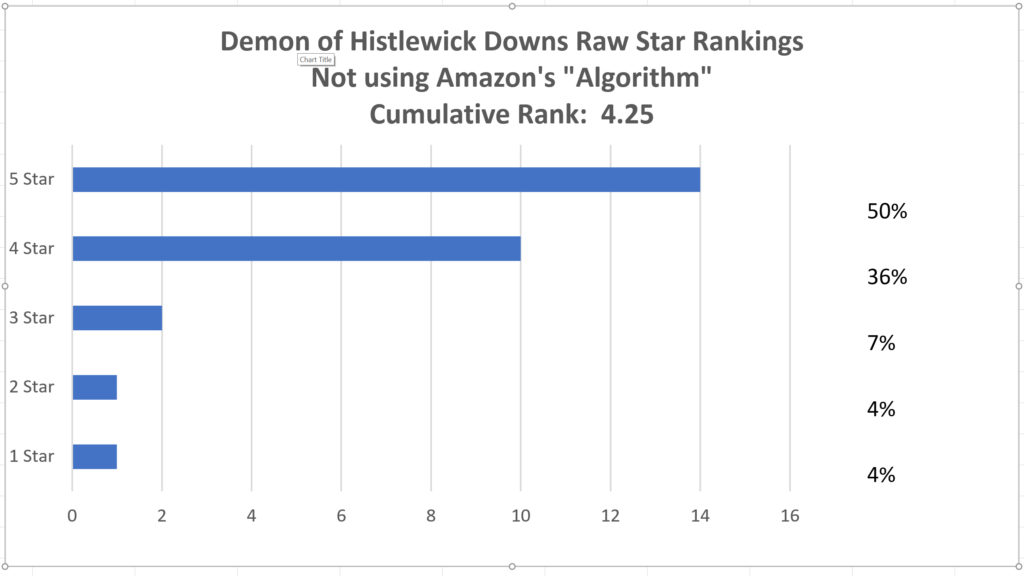

Here’s a graph showing how the calculation would turn out for The Demon of Histlewick Downs using the raw data (uncorrupted by the algorithm).

So, based on a total of 28 ratings, Amazon’s algorithm has decided that the book should rate a full quarter star lower than the actual data supports (4.0 instead of 4.25). It discounts the share of five-star reviews by 11 percent, and it nearly doubles the weight given to the single one-star review and the single two-star review the book has received. Thus, the algorithm makes those two naysayers roughly twice as influential as any of the other folks rating the book. And it does all that without telling readers how it arrives at that conclusion and without providing a comparison to the raw data. If, at some point, Amazon decides to eliminate the raw data, then regardless of how readers rate them, books will only be as good as Amazon says they are, and there will be no way to contest that determination. Amazon has also recently started including “global ratings” that don’t identify who contributed the feedback, so there’s no way to check how those people rated other books. Do you really want an algorithm and nameless reviewers determining which books you read? Or would you rather base your decisions on the comments of real people who’ve actually read the book?

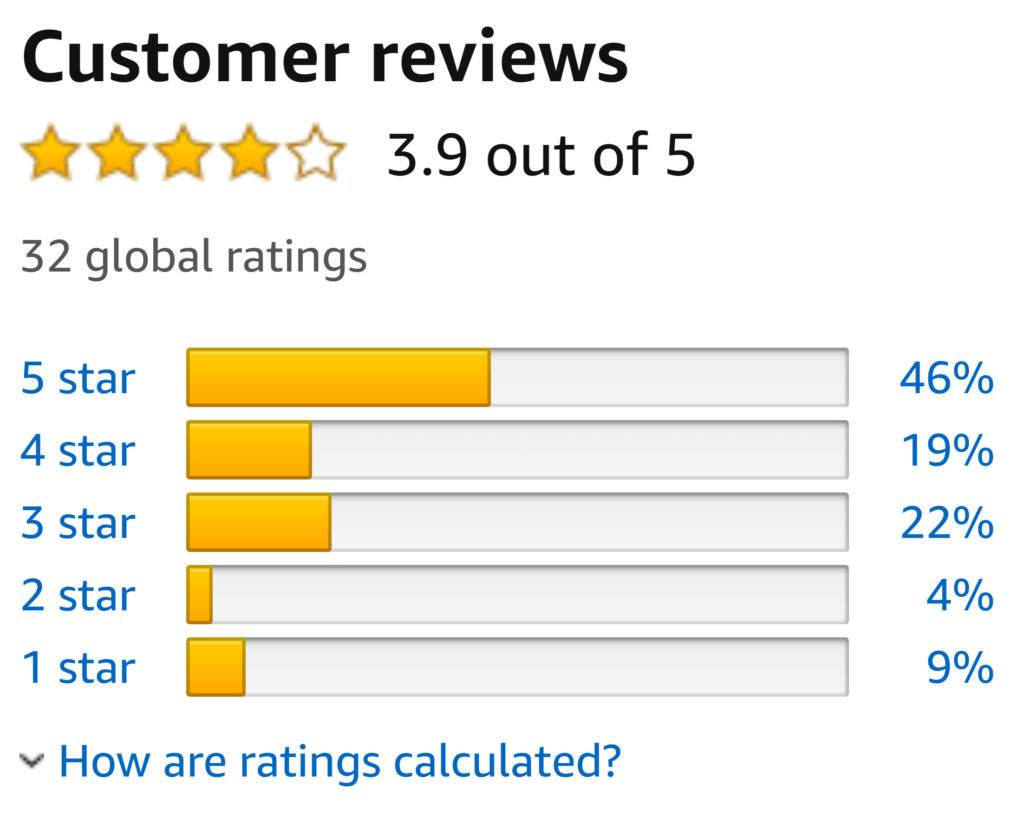

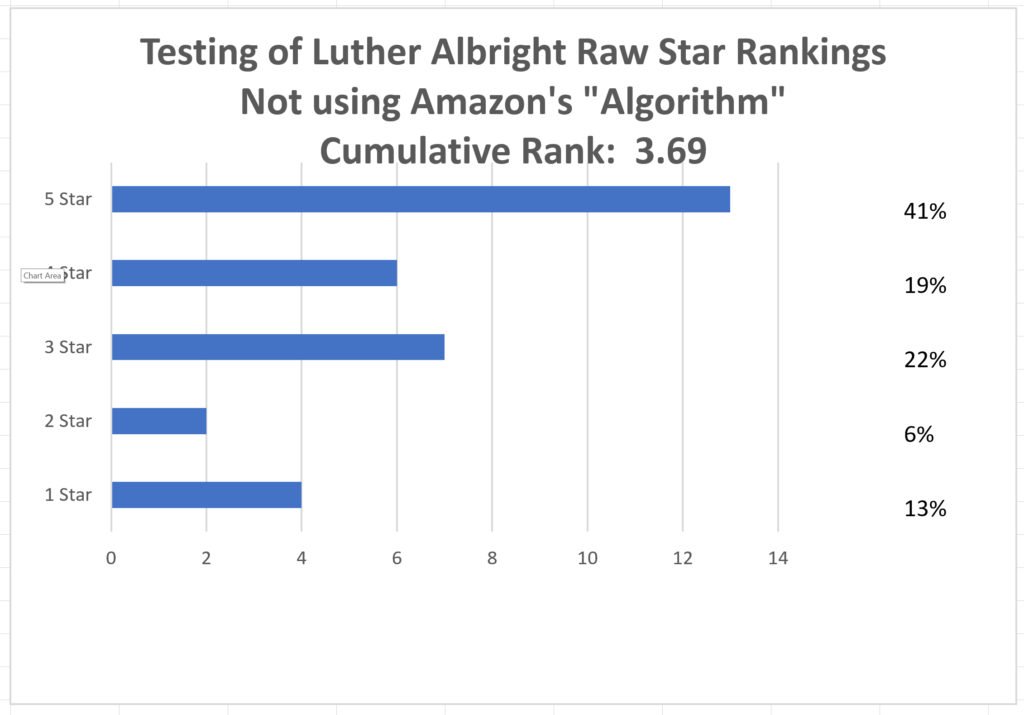

To determine whether Amazon applies the algorithm evenhandedly, I checked out a book by Jeff Bezos’s ex-wife, MacKenzie. Her book, The Testing of Luther Albright, currently has a similar number of star ratings (32) to The Demon of Histlewick Downs (28). How does this book fare with Amazon’s algorithm? Here’s what Amazon’s ratings for Luther Albright look like compared to its raw data:

Lo and behold, Luther Albright’s cumulative rating isn’t cut by 0.25 stars. Rather, Amazon’s algorithm increases this book’s cumulative rating from 3.69 in the raw data to 3.9. To accomplish that feat, the algorithm inflates the five-star ratings for MacKenzie’s book from 41 percent to 46 percent, while the one-star ratings are decreased from 13 percent to 9 percent. In the raw data, Luther Albright had 6 ratings out of 32 (18.8%) that were under three stars, while The Demon of Histlewick Downs had only 2 such ratings out of 28 (7.1%). Yet Amazon’s chart shows Luther Albright with a total of 13% for ratings below three stars, while Demon shows a whopping 14% (double its actual 7.1%). That means Albright’s 6 critical reviews are effectively weighted less than Demon’s 2. What was it about those two reviews that made them so influential to Amazon’s algorithm? There’s no way to know. So, while the raw data gives The Demon of Histlewick Downs a cumulative rating of 4.25 compared to Luther Albright’s 3.69, Amazon’s algorithm has decided they’re pretty much the same at 4.0 and 3.9. Indeed, the star graphics for both are indistinguishable.
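The comparison of critical ratings can be checked the same way. This short Python sketch uses only figures stated above (6 of 32 ratings under three stars for Luther Albright, 2 of 28 for Demon, and the 13% and 14% shown on Amazon’s charts) and divides each chart figure by the raw share. The helper name is illustrative, and because Amazon’s chart percentages are rounded, the ratios are approximate.

```python
def share_below_three(low_ratings: int, total_ratings: int) -> float:
    """Percentage of all ratings that are one or two stars."""
    return 100 * low_ratings / total_ratings


# Raw counts of one- and two-star ratings, as stated in the post.
raw = {
    "The Testing of Luther Albright": share_below_three(6, 32),
    "The Demon of Histlewick Downs": share_below_three(2, 28),
}

# Rounded shares of one- and two-star ratings shown on Amazon's charts.
shown = {
    "The Testing of Luther Albright": 13.0,
    "The Demon of Histlewick Downs": 14.0,
}

for title, raw_pct in raw.items():
    # Ratio above 1: the chart shows a larger critical share than readers gave.
    # Ratio below 1: the chart shows a smaller one.
    ratio = shown[title] / raw_pct
    print(f"{title}: raw {raw_pct:.1f}%, shown {shown[title]:.0f}%, ratio {ratio:.2f}")
```

Luther Albright comes out at about 0.69 (its critical ratings are shrunk on the chart), while Demon comes out at about 1.96 (its two critical ratings are nearly doubled).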

I might not object to Amazon providing a ratings filter if it also displayed the raw data in the same format, side by side, and were transparent about how it arrived at its rating. Then informed readers could compare the two and decide which rating system they prefer. Unfortunately, that’s not what Amazon does.

Buyer beware.
